Polycrisis, complex systems and trust

There is something to the idea of a new kind of systemic failure, polycrisis, arising from the complexity of human institutions.

Dan Drezner mentioned complex systems in his article on “polycrisis”, which makes it relevant for me to talk about; after all, the raison d'être of information equilibrium is to provide tractable ways to take on complex systems¹. I have long noted that the meaning of “complex system” often veers into simply meaning “complicated system” (e.g. here or here), which in this context would mean discussions of “polycrisis” veering into “Events, dear boy, events.”² So what do people mean by polycrisis?

Definitions?

Let’s borrow the definition from a 2022 working paper by political scientists Michael Lawrence, Scott Janzwood, and Thomas Homer-Dixon that’s been making the rounds:

A global polycrisis occurs when crises in multiple global systems become causally entangled in ways that significantly degrade humanity’s prospects. These interacting crises produce harms greater than the sum of those the crises would produce in isolation, were their host systems not so deeply interconnected.

Call this Definition A. This definition does create a concept that differs from independent “events” coupled with pareidolia (or, as Noah Smith puts it, “the polycrisis illusion”), so that “polycrisis” means something different from “omnishambles”. However, a significant issue with this definition is that it relies on a counterfactual that almost certainly cannot be determined. “Polycrisis is a bunch of crises that wouldn’t have been as bad if something about them had been different.”

Maybe a different take? There’s another definition from two of the same authors [pdf] (the last two):

We define a global polycrisis as any combination of three or more interacting systemic risks with the potential to cause a cascading, runaway failure of Earth’s natural and social systems that irreversibly and catastrophically degrades humanity’s prospects.

Call this Definition B. The paper containing Def. B is labeled v1.1 in the filename, while Def. A comes from v2; both are from April 2022, so I’m going to go with Def. A as the update. Def. B is more confused than Def. A. It’s called a crisis but is about risks; only once a risk is realized does it become an “issue” or a “crisis”. In addition, this definition doesn’t just rely on a counterfactual, but on a comparison of counterfactual futures. How could we know if something is irreversible in a social system?

Drezner offers a “quick and dirty” definition:

[Polycrisis is] the concatenation of shocks that generate crises that trigger crises in other systems that, in turn, worsen the initial crises, making the combined effect far, far worse than the sum of its parts.
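To make “far, far worse than the sum of its parts” a bit more concrete, here is a toy linear feedback model. It is an illustrative assumption of mine, not something from Drezner or from Lawrence, Janzwood, and Homer-Dixon: each system i takes an exogenous shock s_i, and every round of knock-on effects adds a fraction C[i][j] of system j’s current crisis level to system i. Iterating x ← s + Cx until it settles gives the combined effect; dividing its total by the sum of the shocks gives the amplification. The function names are hypothetical.

```python
def propagate(shocks, coupling, tol=1e-12, max_rounds=10_000):
    """Toy cascade (illustrative assumption): iterate x <- s + C x.

    shocks[i]      -- exogenous crisis in system i (the "isolated" harm)
    coupling[i][j] -- fraction of system j's crisis that spills into system i
    Each round adds the knock-on effects of the previous round; stop when the
    crisis levels stop changing, or give up if they never settle.
    """
    n = len(shocks)
    x = list(shocks)
    for _ in range(max_rounds):
        new_x = [shocks[i] + sum(coupling[i][j] * x[j] for j in range(n))
                 for i in range(n)]
        if max(abs(a - b) for a, b in zip(new_x, x)) < tol:
            return new_x
        x = new_x
    raise RuntimeError("runaway feedback: crisis levels never settle")


def amplification(shocks, coupling):
    """Combined harm divided by the harm the shocks would do in isolation."""
    return sum(propagate(shocks, coupling)) / sum(shocks)


def symmetric(c):
    """Two systems that each pass a fraction c of their crisis to the other."""
    return [[0.0, c], [c, 0.0]]


for c in (0.0, 0.5, -0.5):
    print(c, round(amplification([1.0, 1.0], symmetric(c)), 3))
```

For two systems that each pass a fraction c of their crisis to the other, the combined total works out to (s₁ + s₂)/(1 − c), so this prints amplifications of 1.0, 2.0 and 0.667. Negative coupling (one crisis easing another) shrinks the harm, and for |c| ≥ 1 the rounds never settle and the loop gives up with an error, which is roughly the “runaway” language of Def. B. Note that even in this toy, computing the amplification requires knowing the coupling, i.e. the counterfactual.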

Yes, he does say it’s “quick and dirty”, but this just seems like interacting crises, and I think Lawrence, Janzwood, and Homer-Dixon (and Adam Tooze) are trying to get at something more. Drezner touches upon it when he talks about complex systems:

In tightly wound and complex systems, not even experts can be entirely sure how the inner workings of the system will respond to stresses and shocks. Those who study systemic and catastrophic risks have long been aware that crises in these systems are often endogenous — i.e., they often bubble up from within the system’s inscrutable internal workings.

This feels a bit like (in Cosma Shalizi’s words) “sub-Bayesian” views about entropy: that “water boils because I become sufficiently ignorant of its molecular state”. In this sense, if the complexity of a system is high enough, I as a human will invariably lose the ability to understand it, so catastrophic consequences can arise seemingly out of nowhere.

But there might be something to this. It could be saying that complexity is a kind of emergent degree of freedom when there are enough moving parts, analogous to entropy being an emergent degree of freedom when there are enough atoms or molecules. Polycrisis is then a deleterious fluctuation originating in this “field”. Much as it is impossible to point to the actual force acting on a water molecule undergoing osmosis (an entropic force), a polycrisis wouldn’t be attributable to a particular series of events; it would instead be a state at a higher level of abstraction with a bunch of things going wrong at once.

I think this might be the beating complex systems heart of a useful working definition of polycrisis, one that might even apply to other events in history. The fall of the western Roman empire (see Bret Devereaux parts I, II, and III) might be seen as the slow-motion result of polycrisis. Western Europe went from a high-productivity, high-population state to a much lower one. Plus, it’s hard to attribute the fall to a particular set of events; there are multiple interpretations. Another example could be the late bronze age collapse: a significant decline across a wide region of previously thriving cultures, without a specific cause but rather a variety of changes. I will try to collect these ideas into a unifying concept. But first, a brief interlude on causal emergence, maximum entropy, and dissipative structures.

Causal emergence

Erik Hoel had a paper written up in Quanta a few years ago that I discussed on my blog (archive copy transferred to Substack). The underlying tool was “effective information”: the ability (or lack thereof) of a model to “decode” a set of data efficiently. The idea of causal emergence was that when a system becomes too complex, with too many interacting nodes, a new simpler (or, more accurately, higher effective information) description would arise in terms of new variables and interactions, i.e. new effective degrees of freedom. A pictorial representation comes from this figure in one of Erik’s (and co-authors’) papers:

Now, I don’t necessarily agree with the causality here (that a new, simpler description always arises). However, this is a common thing in nearly all sciences: physics stops talking about individual atoms and instead uses thermodynamic potentials and emergent concepts like entropy and temperature, biology stops talking about chemistry and moves up to cells and organisms, and economics stops talking exclusively about individual agents and instead has relationships between macroeconomic aggregates. There are new degrees of freedom that are more efficient to use to describe systems as they become more complex.

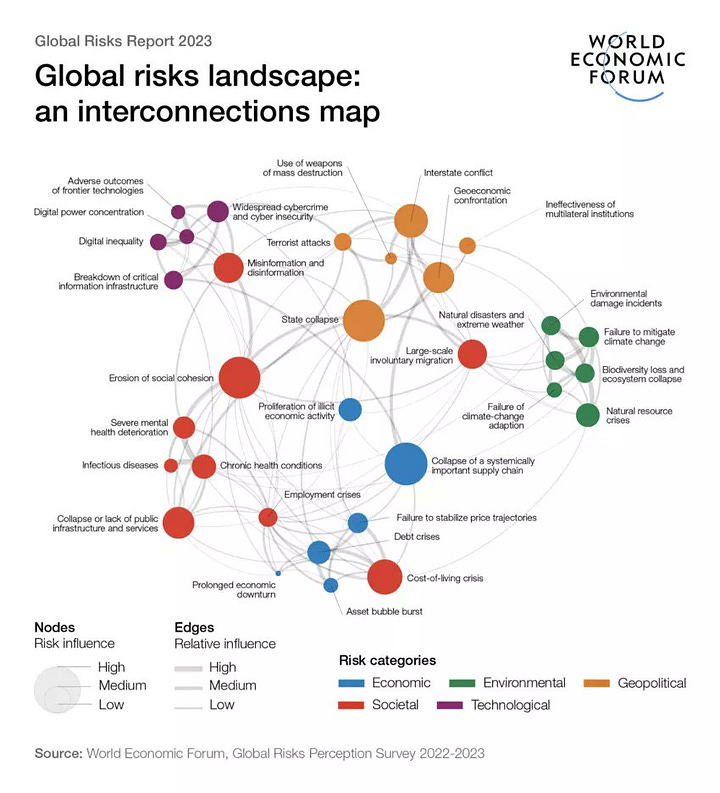
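Effective information can be computed directly for a small discrete system. The sketch below is my own, assuming the usual formulation in the causal emergence literature: EI is the mutual information between a uniform (maximum-entropy) set of interventions and their effects, which equals the average KL divergence of each row of a transition probability matrix from the average row. The four-state toy system is the kind of example used in that literature; `effective_information` and `coarse_grain` are hypothetical helper names, and the coarse-graining assumes interventions are spread uniformly over the micro states inside each macro state.

```python
from math import log2


def effective_information(tpm):
    """Effective information (bits) of a transition probability matrix.

    Intervene on every state with equal probability, then average the KL
    divergence of each row (the effect of setting that state) from the mean
    row (the average effect over all interventions).
    """
    n = len(tpm)
    avg_effect = [sum(row[j] for row in tpm) / n for j in range(len(tpm[0]))]
    total = 0.0
    for row in tpm:
        total += sum(p * log2(p / q) for p, q in zip(row, avg_effect) if p > 0)
    return total / n


def coarse_grain(tpm, groups):
    """Macro TPM: average each group's micro rows, summing over target groups."""
    return [[sum(tpm[i][j] for i in a for j in b) / len(a) for b in groups]
            for a in groups]


third = 1 / 3
micro = [
    [third, third, third, 0.0],  # states 0-2 wander among themselves at random
    [third, third, third, 0.0],
    [third, third, third, 0.0],
    [0.0, 0.0, 0.0, 1.0],        # state 3 always stays put
]
macro = coarse_grain(micro, [[0, 1, 2], [3]])  # {0,1,2} -> A, {3} -> B

print(round(effective_information(micro), 3))  # noisy micro description
print(round(effective_information(macro), 3))  # deterministic macro description
```

The micro description comes out at about 0.811 bits and the coarse-grained one at 1.0 bit: the two macro states predict each other perfectly, while the micro states within {0, 1, 2} are pure noise. That higher-EI description is the sense in which new degrees of freedom “arise”.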

Effective information and causal emergence immediately sprang to mind when I saw the various graphics being used to describe polycrisis. For example:

The idea of polycrisis might be useful to describe crises at this higher level of organization (e.g. economics as a social process interacting with technological innovation to create a global war). Now, these don’t have to be the correctly identified degrees of freedom from which polycrisis emerges. We’ll just keep the part where polycrisis is a manifestation of a degree of freedom that is more useful for describing a higher-level organization than the lower-level degrees of freedom are. The current language used to describe these underlying degrees of freedom consists of our typical “monocrisis” events in history: recession, war, pandemic.

Another source of insight for constructing a useful definition of polycrisis is going to be dissipative structures. Dissipative structures are processes that arise in systems far from thermodynamic equilibrium. A good example is a convection cell. The purpose of a dissipative structure is to consume free energy and produce entropy. My extremely reductive view of the universe appears in the graphic at the top of this post, where we can think of various processes as more and more complex convection cells that dissipate available free energy, converting a few high-energy photons from the sun into many low-energy photons while consuming some source of free energy (a resource) to do so.
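As a rough back-of-the-envelope check on the photon picture (my own estimate, assuming the Sun and the Earth both radiate approximately as blackbodies, so that the mean photon energy is about 2.7 k_B T, and that the Earth re-radiates roughly the energy it absorbs), the number of outgoing photons per incoming photon is about the ratio of the Sun’s surface temperature to the Earth’s effective radiating temperature:

$$
\frac{N_{\text{out}}}{N_{\text{in}}} \approx \frac{\langle E_\gamma \rangle_{\odot}}{\langle E_\gamma \rangle_{\oplus}} = \frac{2.7\,k_B T_{\odot}}{2.7\,k_B T_{\oplus}} \approx \frac{5800\ \text{K}}{255\ \text{K}} \approx 23
$$

So each visible photon that arrives leaves as roughly twenty infrared photons, and since the entropy of blackbody radiation scales with photon number, that multiplication is roughly where the entropy gets produced.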

Each one of these processes not only adds the ability to produce a bit more entropy but also increases the complexity of the system. However, there is a line, which I’ll call the ergodic line, above which systems can fail to be ergodic because the mediating agents have, well, agency. Below the line, the agents are things like atoms and molecules; they will always visit every part of the available state space (ergodicity). There’s a second law of thermodynamics for these kinds of agents. Therefore disrupted lower-level processes will generally re-form: if you stir a pot of hot water, the convection cells will return.
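A minimal sketch of the contrast between agents below and above the ergodic line, under toy assumptions of my own (not a model from this post): “atoms” are random walkers on a ring of bins that each choose their own step, while a “herd” takes one shared step every round. Start everyone in the same bin (a disturbed, concentrated state) and measure the Shannon entropy of how they are spread out. The independent walkers relax back toward the maximum of log₂(10) ≈ 3.32 bits regardless of how they started; the herd never leaves a single bin, so its entropy stays at zero. `simulate` and its parameters are hypothetical.

```python
import random
from collections import Counter
from math import log2


def occupancy_entropy(positions):
    """Shannon entropy (bits) of how the walkers are spread over the bins."""
    total = len(positions)
    return sum((c / total) * log2(total / c) for c in Counter(positions).values())


def simulate(n_walkers=2000, n_bins=10, n_steps=200, herd=False, seed=0):
    """Walkers on a ring of bins, all starting in bin 0 (a concentrated state).

    Independent walkers each pick their own step from {-1, 0, +1}; a herd takes
    one shared step per round, so every walker always sits in the same bin.
    The 0 step keeps walkers on an even-sized ring from alternating between
    odd and even bins forever.
    """
    rng = random.Random(seed)
    positions = [0] * n_walkers
    for _ in range(n_steps):
        if herd:
            step = rng.choice((-1, 0, 1))
            positions = [(p + step) % n_bins for p in positions]
        else:
            positions = [(p + rng.choice((-1, 0, 1))) % n_bins for p in positions]
    return occupancy_entropy(positions)


print(round(simulate(), 2), "bits; the maximum is", round(log2(10), 2))
print(round(simulate(herd=True), 2), "bits for the herd")
```

The only difference between the two runs is whether the agents’ choices are independent, which is the point: the second law needs agents that keep exploring on their own.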

There is no second law for agents above the ergodic line. Humans can suddenly panic and fail to explore the state space. This is the point behind my information equilibrium description of a demand curve. In the first animation, agents (dots) are fully exploring the state space under the budget constraint (black line), purchasing goods in quantities x₁ and x₂ at prices p₁ and p₂. The expected value of this process (black dot) produces a demand curve for x₁ as you increase the price p₁ (jagged blue line):

However, as humans are not atoms mindlessly exploring phase space, they can bunch up and the demand curve fails to function:

In the supply and demand picture, prices fall below equilibrium; in a macro AD-AS picture, the price level and thus the economy at large fall below equilibrium. In general, agents above the ergodic line explore their available state space more when they do not expect to lose too much if that exploration goes awry. That exploration of the state space is what keeps the system (especially economic systems: trade, innovation) functioning. Panic and groupthink, in which all the agents enter similar states, are uniformly negative shocks.
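A stripped-down sketch of the two pictures, under assumptions of my own rather than the exact setup behind the animations: two goods, a budget of 100, p₂ = 1, and “fully exploring the state space” taken to mean that each agent lands on a uniformly random point inside the budget triangle. Averaging over many such agents gives a well-behaved demand curve; for a uniform triangle the average is the centroid, x₁ = budget/(3 p₁). In the bunched-up version every agent copies one random point, so the “average” at each price is a single draw. `mean_x1` and its parameters are hypothetical names.

```python
import random


def mean_x1(p1, p2=1.0, budget=100.0, n_agents=20_000, herd=False, seed=0):
    """Average quantity x1 held by agents inside the budget set p1*x1 + p2*x2 <= budget.

    Ergodic agents each land on a uniformly random point of the budget triangle.
    A herd copies one random point, so its "average" is that single point.
    """
    rng = random.Random(seed)
    a, b = budget / p1, budget / p2  # where the budget line meets each axis

    def draw():
        # Uniform point in the triangle (0,0), (a,0), (0,b): sample the unit
        # square and fold the half above u + v = 1 back onto the lower half.
        u, v = rng.random(), rng.random()
        if u + v > 1:
            u, v = 1 - u, 1 - v
        return u * a, v * b

    if herd:
        return draw()[0]
    return sum(draw()[0] for _ in range(n_agents)) / n_agents


prices = [0.5, 1.0, 2.0, 4.0]
# Ergodic agents: should track budget / (3 * p1), the triangle's centroid.
print([round(mean_x1(p), 1) for p in prices])
# Herd: a fresh collective choice at each price, dominated by noise.
print([round(mean_x1(p, herd=True, seed=i), 1) for i, p in enumerate(prices)])
```

The first list should sit close to 100/(3 p₁), roughly 66.7, 33.3, 16.7 and 8.3; the second jumps around because each price just gets wherever the herd happens to land. Nothing about the budget constraint changed between the two runs, only whether the agents explore.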

Our two insights for defining polycrisis here are that 1) we’re dealing with human systems above the ergodic line and 2) in the systems above the ergodic line, shocks will tend to be bad. Functioning means functioning; failure means some kind of crisis. Or, put another way: happy systems are all alike; every unhappy system is unhappy in its own way. Polycrisis can lead to the failure of an entire system like international trade: a whole convection cell that fails to reorganize within a period of time that is short on a human scale.

To jump back to a point made by both Dan Drezner and Noah Smith: some of the purported interactions (and the directions of the arrows) were in fact beneficial. The pandemic lockdown in China meant inflation wasn’t as bad; high oil prices encourage clean energy and can lead to growth in that sector. However, these cases are more evidence that things could have been worse (i.e. a failure of polycrisis to fully enhance the suck) than of actual amelioration. This also illustrates the drawback of those polycrisis graphics above, and of pointing directly to any particular interaction. If we’re truly talking about an emergent property of complex systems (that complexity field), then, as per the causal emergence picture, the individual arrows and nodes on the polycrisis diagrams are not the correct degrees of freedom, and several connections will appear to “go the wrong way”.

So to summarize:

Polycrisis is an emergent dynamic from systems as they become more complex

Polycrisis consists of failures in multiple processes at or above the human scale

Polycrisis failures are generally bad, reducing the efficiency of systems or even causing them to stop completely

Polycrisis failures of processes and institutions do not reconstitute themselves in a period of time that is short compared to human time scales

Complexity field = trust?

I’m giving away my hypothesis with the title of this section, but since I also gave it away with the title of this post, it’s best to get right to it. The complexity of human civilization is built on a lot of institutions that require trust as a resource: I trust you to do your bit, you trust me to do mine, and together we will be able to live a better life. Some technologies really help economize trust. Money, for example, reduces my need to have a lot of trust in any particular individual human for economic transactions; I just have to trust the entity issuing it.
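A toy count shows how much money can economize trust, under a deliberately crude assumption of my own: without money, every pair of people who trade needs some bilateral trust, while with money each person only needs to trust the single issuer. For n people who all trade with one another:

$$
\underbrace{\binom{n}{2} = \frac{n(n-1)}{2}}_{\text{pairwise trust relationships}} \quad\text{vs.}\quad \underbrace{n}_{\text{trust in the issuer}}, \qquad n = 1000:\quad 499{,}500 \ \text{vs.}\ 1{,}000
$$

The gap grows quadratically with n, which is one way to see why a trust-economizing technology matters more as an economy becomes more complex.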

As a microcosm of human civilization, we can look at the theory of the firm and transaction costs. The main idea is that trust lowers transaction costs: a contract between agents with a lot of mutual trust does not need to be spelled out as fully (thus requiring less time and effort) as one between erstwhile enemies. At the individual level, getting a loan for a small amount of money (e.g. on a credit card, albeit at a higher interest rate) requires less trust than getting one for a larger amount (a home loan).

Going back to Dan Drezner’s second quasi-definition of polycrisis, the complexity of modern society means individuals use systems every day without knowing how they work. As a physicist, I know some rudimentary stuff about how planes fly, electricity flows, or computers calculate. But I have no idea how the antihistamines I take for allergies work or how the NOTAM system functions; I trust that they do. And the vast majority of the air-traveling public probably has no idea how planes fly (in part due to some weird education on the subject) but trusts that they will, or that there are systems in place to handle issues as they arise. As Drezner says, even to experts the systems they study may be largely inscrutable³.

However, there is a massive amount of trust underlying civilization: social trust is the emergent quantity of complex non-ergodic agents, just as entropy is the emergent quantity of enormous numbers of atoms. A failure of trust (a global bunching up in the state space of opportunities) would lead to a massive number of crises that might even seem unrelated on the surface: wars, recession, institutional failure. Polycrisis.

Crisis of trust

It’s pretty ironic that David Brooks was writing about a crisis of trust in The Atlantic a couple of years ago. I think he is genuinely describing something that is occurring in the US, but he is an agent of polycrisis chaos, not a messenger. To me, one of the largest degradations of trust in the US is due to the lack of elite accountability. At the lowest level, Brooks and other members of the op-ed cabal can get paid to write stuff that is just facile or wrong with zero accountability. At the highest level, the rich and powerful get away with literal crimes.

Peter Turchin has his “elite overproduction” hypothesis (which Noah Smith has also discussed): a society can “overproduce” a class of people who believe they should be part of the elite and who therefore act out if that socioeconomic status is not granted. To oversimplify: too many PhDs produce a large cohort of frustrated entry-level workers with PhDs. I think this misses the mechanism: a failure of trust. Not just trust that putting in the work will result in the expected outcome, but trust that meritocracy (the system you buy into when going to college) is a social convection cell you can rely on. When you see an elite who purportedly got where they are on merit post the most asinine tweets, your trust in meritocracy is degraded.

Social media is an exacerbating factor in the crisis of trust. Now that we can see what the elite think, it’s pretty obvious that a lot of them aren’t very different from you or me; they just lucked out in accidents of birth or networks. In addition, social media has allowed us to directly question the elite; not only have the elite freaked out about this, but the answers we’ve gotten don’t inspire confidence. Plus, with all the misinformation that’s now readily accessible, we’re either confused about what to trust or trusting whichever version is more satisfying.

Erik Hoel has described the effect of social media as returning humans as a species to a “gossip trap”, which is his solution to the “sapient paradox” (that modern humans were around for over a hundred thousand years before Göbekli Tepe popped up). I’d say the idea that the complexity of civilization is built on emergent trust is a kind of dual description to his. We as humans needed to invent the technologies of trust before civilization could get started. Organized religion and calendars are probably early technologies of trust that created the possibility for civilization⁴.

If the complexity of civilization runs on trust, then social media misinformation and a lack of elite accountability, consuming that resource and converting it into high-entropy waste, are a recipe for polycrisis. Some industries and political factions benefit from corroding trust in institutions such as science or government (e.g. fossil fuels in regard to global warming; conservatives in regard to social programs). This is in part why I am concerned about the near-term future of the US and the world. A network of trust collapsing on a large scale (a polycrisis) could be behind the late bronze age collapse or the fall of the western Roman empire; there’s no reason why such a thing couldn’t happen here and now.

Noah Smith writes of resiliency in our systems such as global trade; Nassim Nicholas Taleb writes of anti-fragility. Different components of civilization rely on different kinds of trust in different ways, so there is no doubt some amount of robustness⁵ in our systems; in particular, our economic institutions have been constructed to thrive in environments of limited trust (at the cost of externalities). However, we have a political party in control of one legislative house holding the trust in the US financial system hostage. A small-scale version happened in South Korea recently: “the refusal of a government entity to honor a debt payment shocked money markets”. Trust can evaporate in an instant even in our systems built on distrust.

Is polycrisis useful?

The question of whether polycrisis is a useful concept will take a lot more than a few blog posts and a couple of working papers to nail down. I think it is useful for the reasons outlined above: there is a theoretical possibility of an emergent quantity that our complex systems rely on as a resource, whose absence would lead to multiple crises. I tried to motivate trust as at least a useful analogy for this quantity, if not its actual manifestation.

And if we’re trying to do real synthesis, I think there’s a good case to be made that many of the current problems in the world result from a lack of transparency and elite accountability: from authoritarian governments or political parties unaccountable to their constituents in the thrall of disinformation, to elite malfeasance going unpunished. Failures of pandemic responses derive from doubt peddled by politicians and grifters. Our inability to address the climate crisis comes from a lack of follow-through from major players as well as disbelief that the responsibility will be shared equitably. Right-wing fascist and anti-democratic forces are mobilized by an elite that doesn’t want democratic accountability.

Regardless, I think there is at least a real question as to whether polycrisis is more than just omnishambles, and it is a reasonable proposal.

¹ “Information theory provides shortcuts which allow one to deal with complex systems.” That’s the opening line of the abstract of Fielitz and Borchardt’s paper defining information equilibrium.

² Possibly apocryphal quote attributed to Harold Macmillan.

³ I actually use this as a motivation for the information equilibrium approach to economics. Humans are extremely complex (inscrutable, even), so your theory of economics should not start with ideas about how humans think.

⁴ This is also one reason why I don’t see the sapient paradox as a paradox. Technology development before the advent of the written word would be slow, and the development of e.g. farming seems to coincide with an exogenous environmental shock: the end of the last ice age.

⁵ Taleb draws a particular distinction between resiliency, robustness, and anti-fragility. I am using the word generically.