In his famous "Cargo Cult Science" commencement address, physicist Richard Feynman tells a story about a study in which rats in a maze were able to distinguish between purportedly identical doors. It’s too long to excerpt, and I think the fact that it’s possibly apocryphal means this section isn’t quoted as widely as the rest of the address; however, it’s still an important part of distinguishing science from pseudoscience.

The gist of the story is that a scientist worked through a bunch of different possibilities — eventually documenting all the things they had to do in order to render the doors indistinguishable. Lights on the ceiling, scents drifting through the cracks — even mitigating sound by putting the maze on a bed of sand. Feynman then says subsequent studies weren't as careful, and ignored the possibilities of visual, olfactory, and auditory cues that had been carefully worked out by that scientist. Ignoring the pitfalls and caveats carefully worked out in previous studies was a sign of "cargo cult science" — pseudoscience with the outward trappings of science.

An analogous situation appears to be happening in econometrics: the literature of carefully determined caveats and conditions for instrumental variables (IV), difference-in-differences (DID), and regression discontinuity design (RDD) analyses is hand-waved away. We don't really need parallel trends. We don't really need monotonicity. We need this study to produce results, caveats and conditions be damned.

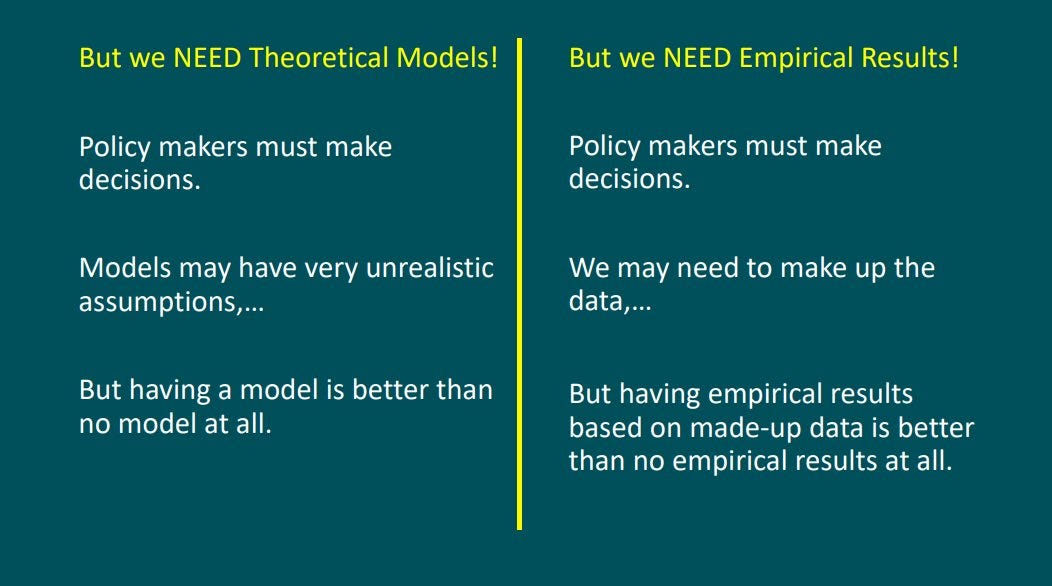

Paul Pfleiderer in a talk about Chameleon models — a problem with caveats in theoretical models in economics — put this drive to produce results in a slide comparing theoretical and empirical results:

“But having empirical results based on made-up data is better than no empirical results at all” is supposed to be an obvious critique of taking this approach to theory. However, it seems that every single econometric study I have looked at in the past couple years has significant issues with their empirical design.

I’ve seen papers with RDDs using higher order polynomials (twitter thread here) and IVs that ignore monotonicity in favor of a new easier-to-satisfy average monotonicity (twitter thread here) as well as exclusion restriction (twitter thread here). Even the paper about the “empirical revolution” in economics cites a study (from the same author as the paper) where omitted variable bias is dismissed with some hand waving that doesn’t appear valid.

The latest cargo cult econometrics is in a paper from Analisa Packham [pdf] that tells us syringe exchange programs (SEPs) increase mortality due to injected opioids. It was written about recently in The Economist because of course it was. This paper is a DID analysis. Which fundamental condition did it hand-wave away, you ask? Why, it was the parallel trends assumption!

DID is critically reliant on using the trend in a control (untreated) group to determine the counterfactual in the treatment group. There is no data that can tell you what this counterfactual actually is — it’s a counterfactual. It didn’t happen. If your trends in control and treatment groups are parallel prior to treatment, there is at least some evidence for what that counterfactual might be. In the paper, they refer to it as testing for diverging pre-trends.
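To make the role of the control group concrete, here is a minimal sketch of the two-period DID arithmetic in Python. The numbers are made up for illustration and are not from Packham’s paper, and `did_estimate` and `mean_change` are hypothetical helper names. The treated series simply continues its own pre-existing trend, yet the calculation still reports an "effect" because the flat control group supplies the wrong counterfactual.

```python
def did_estimate(treat_pre, treat_post, control_pre, control_post):
    """Two-period difference-in-differences on group means."""
    # The counterfactual assumes the treated group would have changed
    # by exactly as much as the control group did (parallel trends).
    control_change = control_post - control_pre
    counterfactual = treat_pre + control_change
    return treat_post - counterfactual


def mean_change(series):
    """Average period-to-period change in a series."""
    steps = [b - a for a, b in zip(series, series[1:])]
    return sum(steps) / len(steps)


# Hypothetical outcome series; the last entry is the post-treatment period.
control = [10.0, 10.0, 10.0, 10.0]  # flat throughout
treated = [4.0, 6.0, 8.0, 10.0]     # rising by 2 per period, before and after

# Difference in pre-treatment trends (post period excluded).
pre_trend_gap = mean_change(treated[:-1]) - mean_change(control[:-1])

# DID using the last pre-treatment period and the post period.
estimate = did_estimate(treated[-2], treated[-1], control[-2], control[-1])

print(pre_trend_gap, estimate)
```

In this toy case the pre-trend gap and the DID estimate are both 2.0: the entire estimated "effect" is just the pre-existing difference in trends.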

If you have diverging pre-trends, then as the figure shows you have no idea what the counterfactual is:

I show a few possible ways to try to extract a counterfactual, but all of them have issues[1]: ignoring the control group, lack of causality, ignoring the pre-treatment data. Basically, there is no evidence that the counterfactual is anything except your actual data from the treatment group; in other words, there’s no effect.

This should be a ridiculously obvious concern: who sets up their SEP in a county where injection drug use and/or HIV/hepatitis incidence has been flat? Almost certainly this paper is seeing a rise in injection opioid mortality because the county instituted SEPs in response to an opioid epidemic, with causality going the other way[2].

One possible mitigation here is discussed in Kahn-Lang and Lang (2019) [pdf] — if you don’t have similar trends, then similar levels can give you some assurance that you can identify the counterfactual that is the linchpin for the entire DID analysis:

So what does the aggregate data in Packham’s paper look like:

Neither the parallel trends nor similar levels conditions appear to be met — and the latter is already a relaxation of the conditions for a DID. So what does the paper do? It just asserts that they are in fact met by extensive use of BS (a technical term).

> Figure 1 plots the difference-in-differences coefficient estimates […] Prior to the introduction of a SEP, estimates are all statistically similar to zero, indicating that opioid-related mortality trends in each group were not diverging prior to the program opening.

But, but … you just normalized the most critical point away — the one at year = −1. We could never tell if causality was the other way around, that starting the SEP was prompted by an increase in opioid-related mortality, if you do that. The period between the rise of opioid deaths and instituting the SEP is uncertain for up to two years due to normalizing the pre-treatment period and binning effects.
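Here is a toy illustration of why the reference year carries no information. It assumes the event-study coefficients behave like the treated-minus-control gap measured relative to year = −1, which is a simplification of the actual regression. The gap values and the helper `normalize_to_reference` are hypothetical.

```python
def normalize_to_reference(gaps_by_event_year, reference_year=-1):
    """Express each treated-minus-control gap relative to the reference year."""
    ref = gaps_by_event_year[reference_year]
    return {year: gap - ref for year, gap in gaps_by_event_year.items()}


# Hypothetical gaps by event year (year 0 is when the SEP opens).
# Case A: nothing happens until the program opens.
flat_then_jump = {-3: 1.0, -2: 1.0, -1: 1.0, 0: 4.0, 1: 4.0}
# Case B: mortality is already rising in the year before the program opens.
rising_before = {-3: 1.0, -2: 1.0, -1: 3.0, 0: 4.0, 1: 4.0}

normalized_a = normalize_to_reference(flat_then_jump)
normalized_b = normalize_to_reference(rising_before)

# The reference-year value is zero by construction in both cases.
print(normalized_a[-1], normalized_b[-1])
print(normalized_a)
print(normalized_b)
```

In both cases the year = −1 value is exactly zero, so that point cannot count as evidence of "no diverging pre-trend". In case B the earlier rise only shows up as negative values in the earlier years, which is easy to miss if those estimates are noisy.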

Prior to being normalized away, Packham additionally deletes the zeros as part of hand-waving away the issue of diverging pre-trends and vastly different levels.

> Because drug-related deaths are a relatively rare event in some areas, for my main analysis I omit counties that experience zero reported occurrences in any year during the sample period. As suggested by Kahn-Lang and Lang (2019), this sample restriction allows treatment and control groups to be more similar ex ante, which places less importance on functional form assumptions of the difference-in-differences model, although below I show that estimates are insensitive to this omission.

This is 1) wrong and 2) just a straight-up misrepresentation of Kahn-Lang and Lang (2019). Because the levels early in the sample differ by a factor of 3-4, simply deleting zeros does not make them more similar (you’ll still have single digit levels compared to double digit levels)[3]. And Kahn-Lang and Lang (2019) does not say "just delete zeros to make the treatment and control more similar"; they advise studies to explain why the levels are different and why this mechanism doesn't impact trends. They literally say that in the abstract[4] (emphasis mine):

> We argue that 1) any DiD paper should address why the original levels of the experimental and control groups differed, and why this would not impact trends, 2) the parallel trends argument requires a justification of the chosen functional form and that the use of the interaction coefficients in probit and logit may be justified in some cases, and 3) parallel trends in the period prior to treatment is suggestive of counterfactual parallel trends, but parallel pre-trends is neither necessary nor sufficient for the parallel counterfactual trends condition to hold. […] Moreover, we underline the dangers of implicitly or explicitly accepting the null hypothesis when failing to reject the absence of a differential [ed. i.e. diverging] pre-trend.

The paper addresses nothing of the sort. In fact, an explanation for why levels might be higher in the treatment group (aside from just population level bias) is proffered as a mechanism for the increase in mortality:

> Third, communities that build a SEP may attract nearby drug users and/or signal that they also support more police leniency for drug users, lowering the legal risk of using opioids.

This is entirely backwards from what you want to be doing if you’re following the suggestions of Kahn-Lang and Lang. It’s also entirely backwards in assuming the causality you want to be proving.

Like the IVs and RDDs I mentioned above, this DID is just hand-waving and BS-ing away the caveats and conditions prior scientists devised to make sure the technique was valid, something Feynman pointed to as a sign of cargo cult science. Your rats are finding the food, but it’s because they can hear the difference in the maze walls, not because of your memory-enhancing treatment. The obvious alternative explanation remains plausible: the SEP was started in response to a rise in opioid usage and attracted users from surrounding counties; it didn’t cause mortality[5].

As Paul Pfleiderer points out above, apparently getting results from this paper was so important that we can just ignore the pitfalls of DID analysis. Apparently it was so important to produce results that it doesn’t even matter if the data is bad:

Yes — if the data is bad you shouldn’t use it.

The excuse for dropping monotonicity for average monotonicity[6] was that random judge assignment was just so useful (read: available) an IV for crime studies that some way needed to be devised to make it work. That is despite studies showing that judges reduce the likelihood of granting parole to near zero just before lunch and increase it after, or that some judges are uniformly harsher towards people of color, either of which breaks the monotonicity assumption.
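A small worked example of why monotonicity matters, using the standard Wald (IV) ratio with made-up population shares. It assumes two defendant types: "compliers", who are incarcerated only by the harsher judge, and "defiers", who are incarcerated only by the more lenient judge (for instance because that judge is harsher towards their group). All names and numbers here are hypothetical and are not taken from any study.

```python
def wald_estimate(share_compliers, effect_compliers,
                  share_defiers, effect_defiers):
    """Wald/IV ratio for a binary instrument (lenient vs. harsh judge)."""
    # Moving from the lenient to the harsh judge pushes compliers into
    # treatment and pulls defiers out of it, so defiers enter with a minus sign.
    reduced_form = (share_compliers * effect_compliers
                    - share_defiers * effect_defiers)
    first_stage = share_compliers - share_defiers
    return reduced_form / first_stage


# Monotonicity holds: no defiers, and the ratio recovers the complier effect.
no_defiers = wald_estimate(0.3, 1.0, 0.0, 2.0)

# Monotonicity fails: 20% defiers whose true effect is also positive.
with_defiers = wald_estimate(0.3, 1.0, 0.2, 2.0)

print(no_defiers, with_defiers)
```

Every individual effect in this sketch is positive (1.0 and 2.0), yet with defiers present the ratio comes out at roughly −1.0, outside the range of the true effects and with the wrong sign.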

Now we’re hand-waving away parallel trends for DID. No study is so important that we need results regardless of how much you have to bend the underlying assumptions. This is not science, but cargo cult econometrics.

It may seem like all of these would still show the treatment data is higher — that’s true because it is! The data is actually higher. You likely wouldn’t have done the study otherwise. However, because of the diverging trends you have no idea what the counterfactual is so you have no information about the causal effect of the treatment which is what we are purportedly after.

It’s also possible that administrative data would better capture deaths from opioid injection in the vicinity of SEP sites (drawing opioid users to them, injecting nearby) creating a streetlamp-like effect.

If it does, then as Feynman says, show that it does. That’s part of examining your own results by “leaning over backwards” to show how you could be wrong.

Their point 3 is basically what I was talking about when discussing the counterfactual above.

In what is almost a tragic comedy, the study shows starting SEPs reduces overall mortality with almost the same confidence it shows SEPs increase opioid-related mortality. And completely on-brand Packham just asserts a misrepresentation of the graph. “Total mortality rates decrease in SEP-adopting counties both prior to and after the opening of the program.” But they decrease a lot more after the SEP starts! And we don’t actually know about the year prior because you normalized it away!

Note: it took me less than 5 minutes to find two stories of HIV outbreaks due to increasing injection drug usage causing the opening of SEP sites:

https://www.theintelligencer.net/news/top-headlines/2022/10/commission-city-back-needle-exchange-program/

https://www.cincinnati.com/story/news/2019/01/22/boost-access-syringe-exchange-curb-cincinnati-hiv-spike-cdc-says/2643539002/