A list of valid and not so valid complaints about economics

Last night Noah Smith tweeted an NBER paper showing that log-linearization wasn't really a big deal in a nonlinear New Keynesian DSGE model. Since this was essentially an explicit example of a case where a common complaint about economics and Taylor series approximations wasn't really valid, I was inspired to construct a (potentially growing) list of complaints about economics when it comes to science and mathematics, based on my many posts on the subject.

In constructing this list, I found that several of these posts are in the top 5 most viewed posts (namely, #1, #3, and #5) making me realize that I am probably mostly seen out in the econoblogosphere as a physicist critiquing various approaches to economics rather than as the crackpot developer of the information transfer framework. This may bode well for my forthcoming book which is more critique than information theory.

Update 4 November 2016: I want to emphasize that this is a list of complaints about economic methodology with emphasis on scientific method and mathematics. It is not supposed to be a list of effects economics includes/excludes or complaints about specific models. They might appear as examples (e.g. I mention DSGE below), but are not the primary complaint. It is primarily intended as a corrective, written by a scientist, to the many complaints in the econoblogosphere that "economics is unscientific because of X", where the X's are the top line complaints below.

Update 13 March 2017: I am adding some popular books critiquing economics under the complaints below where they seem to fit.

We'll start with the invalid complaints ...

* * *

Not so valid complaints about economics

"Economics keeps only the linear terms of Taylor approximations"

As mentioned above, I wrote an entire post on this. Keeping linear terms in Taylor series is usually fine, so this doesn't work as a general criticism. It could potentially work against a specific model.
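As a rough illustration of why keeping only the linear term is usually fine, here is a minimal sketch that compares log(1 + x) with its first-order Taylor approximation x. The function names and the chosen deviations are my own illustrative assumptions, not anything taken from the NBER paper.

```python
import math

def linear_log_approx(x):
    """First-order Taylor approximation of log(1 + x) around x = 0."""
    return x

def relative_error(x):
    """Relative error of the linear approximation against the exact log(1 + x)."""
    exact = math.log1p(x)
    return abs(linear_log_approx(x) - exact) / abs(exact)

# Hypothetical deviations from steady state: 1%, 5%, 20% and 100%.
for deviation in (0.01, 0.05, 0.20, 1.00):
    print(f"deviation {deviation:5.2f}: relative error {relative_error(deviation):.3f}")
```

For deviations of a few percent the linear term is off by only a few percent of the exact value; the approximation degrades only when the deviation itself becomes large. Whether a specific model stays in the small-deviation regime is exactly the kind of question that has to be asked model by model.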

"Economics ignores nonlinear models"

Part of this is captured in the post on Taylor approximations and the new NBER article. However, I also wrote a post (that is now the 5th most viewed post on my blog, with follow-up) addressing one of the better empirical arguments against using nonlinear models. As Roger Farmer's beautifully concise post puts it, without hundreds of years of data we can't meaningfully tell the difference between a nonlinear model and a linear model with stochastic shocks.

"Economics makes unrealistic assumptions"

In science, unrealistic assumptions are made all the time. What matters is the end result of those assumptions. Physics operates this way routinely; the approach is called "effective theory", and "effective field theory" (what Weinberg called phenomenological Lagrangians) is a formalization of the idea for advanced theoretical physics.

I wrote two posts (here, here) about this subject in response to someone making this claim.

"Economics has too much math"

My background is in physics, but I've been studying economics and finance for over 10 years. My experience is that the level of math being applied is generally appropriate to the problem at hand. Some aspects of economics appropriately aren't very mathematical. Development economics comes to mind.

However, this complaint tends to be made about macroeconomics and the study of the business cycle. These are two things that we would not even know exist without mathematics. For example, no single person can see all the output or all the employed and unemployed people at once. This data must be compiled, which generates numerical quantities. Additionally, prices are numerical. How does one deal with the rise and fall of interest rates without mathematics?

I've written three posts (here, here, and here) on this in which I've tried to understand this charge -- what is it really trying to say? There seem to be many different reasons that range from genuinely feeling left out of a field that has an important effect on people's lives to avoiding testing one's theory against empirical data.

Added 5pm PT. There is a possibly valid version of this in that a lot of economics is written too mathematically; I address this below.

Mathiness

Paul Romer made his big debut in the econoblogosphere with a paper on what he called "mathiness": the lack of technical rigor (Romer used the words 'tight links') in mathematical arguments in economics.

I read through his paper and his specific claims make no economic sense from the standpoint of dealing with the reality of economic systems. It's now my 3rd most-viewed post. I think Romer has touched on something, however, and the issue is really about domains of validity (scope), scales, and limits (see below).

Added 5pm PT. Some people have different interpretations of what "mathiness" is (Romer himself felt misunderstood on this subject [also, Romer's response to my post]). Some people consider "mathiness" to be writing economics with overly formal mathematical symbols, which I address below.

"Macro is like string theory (in a bad way)"

Paul Romer's second big splash was with a devastating critique of macroeconomics. Many of the critiques are valid. However, to argue that macro is unscientific he uses an analogy with string theory, and that analogy really misunderstands string theory.

* * *

Complaints that depend on framing

"Economics is bad at forecasting"

This really depends on a lot of factors. Long run or short run? Does the theory actually say the result is forecastable?

DSGE models are designed to forecast over the short run, but appear to be unable to do so -- or do so worse than simple stochastic models. That's a valid complaint!

"Economics can't predict recessions"

Following the complaint about forecasting, we look specifically at recessions. Are recessions random, or are they predictable?

Science can't predict earthquakes, but can predict where earthquakes might occur and levels of strain building up in faults.

Some economic theories predict recessions to be quasi-periodic. But much like the problem with validating the forecasting abilities of presidential election models, we don't have a lot of recessions to work with in the time series. Even a model that predicted the past 3 or 4 recessions (meaning it would have had to have been built in the 80s) could have done so out of luck (although if it matched the severity and duration of those 4 recessions, that might be a real thing).

One should probably withhold judgment until we have some evidence that recessions are predictable.

"Economics is unscientific"

Which aspect?

This is not a binary condition, but rather a continuum. String theory in physics could be considered unscientific in the sense that it has little connection to data. However, it's a very scientific extension of standard quantum field theory (you could call it the quantum field theory of strings instead of string theory, and in fact that is the title of a string theory book). So something can really be unscientific in one way, but scientific in another.

As I wrote about here, some aspects of economics appear unscientific (to me) while other aspects don't.

"Economics is not empirical"

Again, this depends on what you are talking about. VAR models are entirely based on empirical data. Sometimes DSGE models aren't compared to data. Sometimes they are.

"Economic quantities are phlogiston"

Added 4 Nov 2016. I remembered this one from Paul Romer's paper, but I think Matthew Yglesias was the source of my own usage with regard to total factor productivity. Phlogiston was originally thought to be the substance contained by combustible materials that made them burn. You can see the obvious circularity in the definition.

Economics introduces many different quantities when describing economic data. Utility, total factor productivity, technology shocks. Some are unmeasurable (utility). Some of these are dangerously close to phlogiston (TFP).

However, introducing new and possibly unmeasurable quantities has long been a part of science. Sometimes they end up being phlogiston. Sometimes they end up being momentum ("quantity of motion" per Newton). It may be true that utility is unobservable. However the quantum wave function is also unobservable.

Therefore, this should be considered on a case by case basis. It is hard to say whether TFP or utility will end up being useful quantities. I personally don't think so, but I also can't rule it out.

* * *

Valid complaints

The identification problem

I think Paul Romer did a delightful job explaining the identification problem. Basically the idea is that any system with m equations in m unknowns will have way too many parameters. Expectations and nonlinear models make this worse.

"Economics does not appear to treat limits properly"

In looking at Romer's mathiness complaint (above), I realized that the way that economists treat limits (taking variables to zero or infinity) is, in a word, sloppy. This can lead to some serious problems such as producing contradictory results or nonsense. The issue can be related to dimensional analysis and understanding the scales of the theory (Romer concedes that he -- and therefore likely other economists -- ignores scaling).

The basic idea is that 1) 0 and infinity are effectively related by 1/∞ ~ 0, and 2) both zero and infinity are dimensionless (have no units). This means you can never take the limit as time t goes to infinity (t → ∞), because t has units (seconds, quarters, or generic time periods) while infinity does not. The same goes for t → 0.

Therefore, if you ever want to take limits, you need to understand what your fundamental time scale t₀ (with units of seconds, etc) is so that you can take the limit t/t₀ → ∞. In physics, we tend to write this as t/t₀ >> 1 (the double greater than signs read as "much greater than").
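To make the point about units concrete, here is a small sketch. The horizon of 40 quarters and the time scale t₀ of 8 quarters are hypothetical numbers chosen purely for illustration. The raw value of t changes when you switch from quarters to years, so "t is large" is not a meaningful statement on its own; the ratio t/t₀ does not change.

```python
QUARTERS_PER_YEAR = 4

def dimensionless_time(t, t0):
    """Ratio t / t0; both arguments must be expressed in the same unit."""
    return t / t0

# Hypothetical numbers: a 40-quarter horizon and an 8-quarter time scale t0.
t_quarters, t0_quarters = 40.0, 8.0

# The same two durations expressed in years.
t_years = t_quarters / QUARTERS_PER_YEAR
t0_years = t0_quarters / QUARTERS_PER_YEAR

ratio_in_quarters = dimensionless_time(t_quarters, t0_quarters)
ratio_in_years = dimensionless_time(t_years, t0_years)

print("t in quarters:", t_quarters, "| t in years:", t_years)  # depends on the unit
print("t/t0:", ratio_in_quarters, "and", ratio_in_years)       # does not
```

Only the unit-free ratio can sensibly be compared with 1, which is why the limit has to be stated as t/t₀ >> 1 rather than as a statement about t alone.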

The practical result of this is that there are a ridiculous number of undefined (or implicitly defined) time scales like t₀ hiding in economic theory.

Romer's mathiness complaint ends up being wrong because he didn't realize that his double limit makes zero sense: he effectively takes both t → ∞ and t₀ → ∞ in different orders and (as would be expected) ends up with nonsense.

Now you don't have to actually take the limits in order to have these implicit scales in your model. To my chagrin, I pointed this out about stock-flow consistent models (what is by far my #1 most-viewed post), and received a barrage of comments saying that I didn't understand what I was talking about from people suffering from the Dunning-Kruger effect.

"Economics does not deal with domains of validity (scope)"

Every theory, every model, has a range of inputs over which it is valid. In physics, we call it domain of validity (e.g. Sean Carroll uses the term here and here), but Noah Smith appears to think we call it scope conditions (which I think is actually a sociology term). Regardless of what you call it, economics doesn't address it much. Noah says: "I have not seen economists spend much time thinking about domains of applicability (what physicists usually call 'scope conditions'). But it's an important topic to think about."

It is. It is very important. It is closely linked to the scales and limits mentioned above.

Newtonian physics for example is valid for speeds v where v/c << 1 (the scope) where c is the speed of light (the scale [1]). When v ~ c, then Einstein's theory of relativity becomes important.
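A quick numerical sketch of that scope condition: the function below (my own illustrative helper, not from any of the linked posts) computes how far the Newtonian kinetic energy is from the relativistic one as a function of the dimensionless ratio v/c alone.

```python
import math

def newtonian_ke_relative_error(beta):
    """Relative error of Newtonian kinetic energy at speed ratio beta = v / c.

    Exact (special relativity): KE = (gamma - 1) m c^2
    Newtonian:                  KE = (1/2) m v^2 = (1/2) beta^2 m c^2
    The mass and c^2 cancel in the ratio, so only beta matters.
    """
    s = math.sqrt(1.0 - beta ** 2)        # s = 1 / gamma
    exact = beta ** 2 / (s * (1.0 + s))   # gamma - 1, rearranged to avoid cancellation
    newtonian = 0.5 * beta ** 2
    return (exact - newtonian) / exact

for beta in (0.001, 0.01, 0.1, 0.5, 0.9):
    error = newtonian_ke_relative_error(beta)
    print(f"v/c = {beta:5.3f}: Newtonian KE is off by {error:.4%}")
```

For v/c << 1 the error is negligible, and it grows to tens of percent as v approaches c. The model itself never tells you this; you only see it once the scale c is made explicit.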

Because economic theories have implicit scales floating around, and economics doesn't take limits properly, one ends up with a confused mess when one tries to understand the scope of any particular model or formula. For example, I looked at the present value formula (more accounting than economics) in this light.

In a more interesting example, utility maximizing rational agents clearly fail when you have N = 1 agent as shown by many experiments. However, they appear to be a decent approximation when N ~ 20 agents in at least one experiment. As Gary Becker showed, you get the same results from an ensemble of irrational agents as you do from a single rational agent. I made the case that rational agents with many of the properties economists assume (but do not appear to be true of individual agents) could emerge in systems with a large number of agents.

What if there was a group scale of N₀ people (say, 100) [2] which told us that when N/N₀ >> 1 we can assume rational utility maximizing agents? This would make sense of the failure of individual (N = 1) human behavior to appear rational. The rational agent model is out of scope for individual humans.

I am not saying that is definitely true; it is just a possible resolution. It's also a possible resolution that would be clearer to understand if economics treated scopes and scales more rigorously.
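Here is a toy sketch in the spirit of the Becker result mentioned above. It is not his actual model or any of the experiments; the setup (each agent spends a uniformly random share of a fixed income on one good, and the price of that good doubles) and all names and numbers are assumptions made for illustration. It counts how often average demand falls when the price rises, for groups of different sizes N.

```python
import random

def average_demand(price, n_agents, income=100.0, rng=random):
    """Mean quantity bought when each agent spends a random share of income on the good."""
    total = 0.0
    for _ in range(n_agents):
        share = rng.random()  # no preferences: a uniformly random point on the budget line
        total += share * income / price
    return total / n_agents

def fraction_law_of_demand_holds(n_agents, trials=500, seed=0):
    """Share of trials in which average demand falls when the price doubles."""
    rng = random.Random(seed)  # seeded so the sketch is reproducible
    hits = 0
    for _ in range(trials):
        demand_at_low_price = average_demand(1.0, n_agents, rng=rng)
        demand_at_high_price = average_demand(2.0, n_agents, rng=rng)
        if demand_at_high_price < demand_at_low_price:
            hits += 1
    return hits / trials

for n in (1, 20, 1000):
    print(f"N = {n:4d}: demand fell in {fraction_law_of_demand_holds(n):.1%} of trials")
```

A single random agent violates the "law of demand" in about a quarter of the trials, while the average over a large group essentially never does. The downward-sloping demand curve here is a property of the budget constraint and the ensemble, not of any individual's rationality.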

Books:

Dani Rodrik's Economics Rules (although I don't necessarily agree with the solution)

"Economics is written too mathematically"

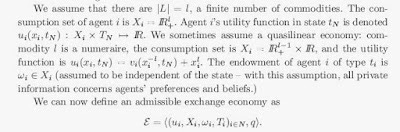

Added 5pm PT. I think some people see this as "mathiness" or "physics envy", but the following snippet (flagged by Duncan Black, himself an economist) is a not-uncommon paragraph from an academic economics paper:

I wrote about this here. I think writing papers in this style obscures more than it illuminates. As I wrote at the link: "There is quite literally no reason for an economist to refer to the real numbers as ℝ or even refer to real numbers at all. To say x ϵ ℝ+ is pretentiousness compared to x > 0." Overall, this is a superficial complaint; the mathematics underlying the terse symbols is usually relevant. It could just be written in simpler, less symbolic language. It really is just an academic culture of writing papers like this (something that "looks like an economics paper").

"Economics accepts stories too easily"

Added 5 Nov 2016. This is probably more a human failing than one specific to economics, but macroeconomic theories are used to produce narratives that are proffered with a certainty that far exceeds their empirical success at describing macroeconomies. I've compiled a list here.

I'm not endorsing the rest of his book or ideas, but Nassim Nicholas Taleb writes about this, calling it the narrative fallacy.

Science is primarily a defense against fooling yourself (Feynman), and narratives constructed by your left brain interpreter are a very seductive way to fail. Data first, story later.

Books:

James Kwak's Economism (although he makes the distinction that what he calls "economism" isn't really economics)

...

Footnotes:

[1] For those interested, c comes from the Latin for "quickness": celeritas

[2] For example in physics, when the number of "agents" (atoms) N >> Nₐ (Avogadro's number ~ 10²³), chemistry and thermodynamics are the valid effective theories of Newtonian and quantum mechanics -- that differ from them quite extensively. Maybe something like this happens in macroeconomics?